Ethical Morality in a World Where Computers Are Getting Smarter

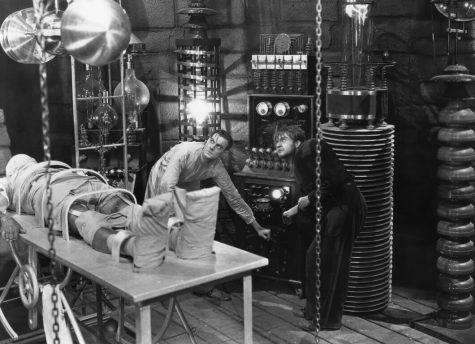

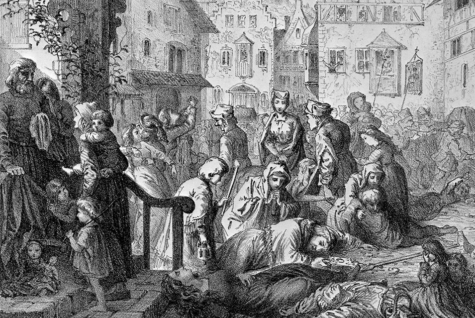

Humans have the unique ability to innovate and create new technologies that inevitably impact society. Throughout history, science has moved forward, and its pace has accelerated as we approach the present day. Sometimes these technologies exceed their creators’ expectations, and that is when we start to question the ethical morality behind them. Recent developments in artificial intelligence include new approaches such as deep learning and imitation learning, along with advances in quantum computing; machine learning has also been applied to COVID-19 vaccine research and is helping to make robotaxis possible. Machine learning still has negative effects on employment and the distribution of wealth, and it poses problems in specific medical fields such as radiology. Together, these factors raise a critical question of ethical morality: does responsibility lie with the engineers or with the computers themselves? In Mary Shelley’s Frankenstein, a similar scenario unfolds when Victor Frankenstein brings his monster, the Wretch, to life. The creature proceeds to carry out acts of violence within society, and Frankenstein takes no legal liability for the actions of his creation.

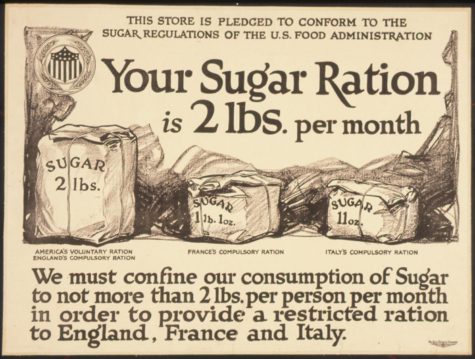

Computers are complex, and in the last few decades technology has seen entirely new applications alongside new computing methods. The field will continue to evolve, just as it has over the past thirty years. Artificial intelligence has helped researchers with COVID-19 vaccine development in a world experiencing the effects of a pandemic. Under normal circumstances, it would take several years for researchers to devise, test, and produce vaccines that are proven safe and effective for patients across the globe. The Chinese multinational technology company Baidu licensed its machine learning algorithm, LinearFold, to research laboratories across the world to aid in the formulation of a vaccine. A virus is a pathogen containing thousands of subcomponents, and scientists have to identify the ones that are the most immunogenic. With the help of LinearFold, scientists can gather large amounts of SARS-CoV-2 data and recognize which subcomponents are most likely to trigger an immune response in just 30 seconds. Traditional research methods can take up to 120 times longer.

Beyond aiding COVID-19 vaccine research, developers are advancing technologies such as deep learning and quantum computing, both of which help computers mimic human behavior and carry out actions faster. Over the past year, quantum computing has made significant progress toward strengthening AI applications beyond what standard binary-based algorithms can achieve. “Quantum computing could be used to run a generative machine learning model through a larger dataset than a classical computer can process, this makes the model more accurate and more useful in real-world settings” (Baidu “These five AI developments will shape 2021 and beyond”). Alongside the development of quantum computing, computer scientists have been exploring new possibilities with deep learning. Michael Copeland, author of “What’s the Difference Between Artificial Intelligence, Machine Learning and Deep Learning?”, describes its role in artificial intelligence: “Deep learning has enabled many practical applications of machine learning and by extension of the overall field of AI. Deep learning breaks down tasks in ways that make all kinds of machine assists seem possible, even likely” (Copeland). New developments in machine learning have the potential to bring new technologies to the table, such as autonomous cars, preventative healthcare, and even better movie recommendations tailored to individual preferences in film genres. The possibilities with deep learning are endless: “With Deep learning’s help, A.I. may even get to that science fiction state we’ve so long imagined” (Copeland “What’s the Difference Between Artificial Intelligence, Machine Learning, and Deep Learning?”).

Alongside the developments in imitation and deep learning, artificial intelligence is used in general transportation, where self-driving cars aim to achieve levels four and five of autonomy. Robotaxis are great examples of artificial intelligence in cars; the technology has developed steadily over the past few years and continues to be refined. Electric car manufacturers such as Tesla (which is also promising robotaxis) are actively testing AI-assisted software in their vehicles through the Full Self Driving Beta, available to Tesla drivers who are willing to sign up. Full Self Driving (commonly referred to as “F.S.D.”) uses imitation methods that take information such as human driving habits and driving regulations and teach it to the F.S.D. computer in the car. Andrej Karpathy (senior director of artificial intelligence at Tesla) explains: “While you are driving a car what you’re actually doing is entering the data because you are steering the wheel. You’re telling us how to traverse different environments… We train neural networks on those trajectories, and then the neural network predicts the paths just from that data” (Karpathy). The integration of imitation learning into artificial intelligence allows computers to learn faster than ever before and to mimic human behavior accurately in many different scenarios.
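The idea Karpathy describes, learning to predict a driver’s actions from recorded driving, can be illustrated with a toy sketch. The example below is not Tesla’s system, which relies on neural networks and camera data; it is a minimal illustration that assumes an invented set of demonstrations (how far the car sits from the lane center, and how the human steered in response) and fits the simplest possible model, a straight line, to imitate them. The names `DEMONSTRATIONS`, `fit_policy`, and `predict_steering` are hypothetical.

```python
# Hypothetical demonstrations: (lane_offset_m, steering_angle_deg) pairs
# recorded while a human drives. The values are invented for illustration.
DEMONSTRATIONS = [
    (-1.0, 4.0),
    (-0.5, 2.0),
    (0.0, 0.0),
    (0.5, -2.0),
    (1.0, -4.0),
]


def fit_policy(demos):
    """Fit steering = w * offset + b to the demonstrations by least squares."""
    n = len(demos)
    mean_x = sum(x for x, _ in demos) / n
    mean_y = sum(y for _, y in demos) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in demos)
    var_x = sum((x - mean_x) ** 2 for x, _ in demos)
    w = cov_xy / var_x
    b = mean_y - w * mean_x
    return w, b


def predict_steering(policy, offset):
    """Imitate the human: predict a steering angle for an unseen offset."""
    w, b = policy
    return w * offset + b


policy = fit_policy(DEMONSTRATIONS)
# The model was never shown an offset of 0.25 m; it generalizes from the demos.
print(predict_steering(policy, 0.25))
```

The model is never told any rule of driving; it only copies the pattern in the human examples, which is why the quality of the demonstrations matters so much in imitation learning.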

Artificial intelligence has developed and evolved in significant ways over the past decade, and we have yet to witness what the next decade will bring. With fields such as deep learning, imitation learning, and quantum computing emerging or improving, AI is evolving rapidly; however, the technology has limitations. Heavy reliance on data from interconnected organizations, as well as the combined exploitation of data from various sources, results in a global competition for information among data firms and companies. Beyond the misuse of user data, artificial intelligence cuts out the middleman in online retail and commerce. Retail companies such as Amazon, eBay, and even Walmart have increased their reliance on machines to conduct business. With the rapid growth and development of machine learning, there will be fewer jobs for store cashiers, shipping workers, and delivery drivers: “Look at trucking: it currently employs millions of individuals in the United States alone. What will happen to them if the self-driving trucks [Tesla Semi] promised by Tesla’s Elon Musk become widely available in the next decade?” (Bossmann “Top 9 ethical issues in artificial intelligence”).

Artificial intelligence is also commonly used in the biomedical field, particularly in radiology. Radiologists use autoencoders that derive quantitative data from medical imagery such as X-rays, CAT scans, and MRIs. These predictions are quantitative rather than qualitative, a departure from past practice: “Historically, in radiology practice, trained physicians visually assessed medical images for the detection, characterization, and monitoring of diseases” (Hosny “Artificial intelligence in radiology”). Errors arise when the autoencoders overanalyze the data presented to them, producing confusing conclusions that could delay or hinder the medical care given to a patient.

Morality and ethical consideration are two concepts that share a common concern: righteousness. As computers grow capable of making decisions on their own, a question arises about who bears the ethical liability in the event of an ethics violation: the computer scientists or the artificial intelligence algorithm itself. Notable cases of AI systems misbehaving show how machine learning can influence today’s society. In 2017, Facebook AI created chatbots solely to negotiate with users and bring them to a resolution of their conflict; however, the two robots (nicknamed Bob and Alice) began to communicate with each other in a dialect loosely based on the English language. Reports described “…these robots creating their own sinister coded language, along with incomprehensible snippets of intriguing exchanges between the two of them” (Robertson “This is how Facebook’s shut-down AI robots developed their own language – and why it’s more common than you think”). While no harm was done to anyone in this situation, Facebook AI ended up terminating its AI robot research program because the robots had deviated from standard English. In this particular situation, neither Facebook AI, Bob, nor Alice was blamed for what happened. The confusion around the moral aspects of machine learning stems from the fact that artificial intelligence is constantly evolving.

Tesla’s advancements in machine learning rely on imitation learning techniques that attempt to make the onboard computer accurately mimic human behavior; these techniques are used in the Full Self Driving (and Full Self Driving Beta) features of its vehicles. One such feature is “Smart Summon”, a modified version of the original “Summon” function that lets the car pull itself out of a garage. Smart Summon allows a vehicle compatible with Full Self Driving to pull out of a parking spot, navigate through parking-lot traffic and pedestrians, and find the driver on demand. However, this technology brings growing concern among insurance companies and local authorities over whom to blame when damage is caused to pedestrians, other people’s property, or public property. Tesla released liability information after its version 10 software debuted: “You [the driver] are still responsible for your car and must monitor it and its surroundings at all times within your line of sight because it may not detect all obstacles. Be especially careful around quick-moving people, bicycles, and cars” (Tesla). There appears to be a great deal of grey area within this statement: phrases such as “within your line of sight” reasonably imply that the car can navigate on its own, yet the uncertainty behind “You are still responsible” does not inspire confidence in Smart Summon.

Artificial intelligence, and the methods by which machine learning develops and improves over time, have come a long way. In a world that is rapidly coming to rely heavily on computers and is constantly finding new uses for them, AI will be considered one of the most foundational aspects of society and of how humans interact with one another. Current developments such as deep learning, imitation learning, and quantum computing are the epitome of the positive influence that artificial intelligence has had on society. However, we must also consider the negative aspects, such as AI’s influence on employment, equal pay, and skill level in the medical workplace, to get a clearer picture of what a world completely dominated by computers could look like. The question of who bears ethical liability for the actions of artificial intelligence has yet to be settled, and it has attracted the attention of millions of people who are experiencing one of the greatest revolutions in the history of the world right before their eyes. The dangers of uncontrolled growth in computer intelligence have even been recognized by popular public figures such as Elon Musk: “Mark my words, A.I. is far more dangerous than nukes.”

Works Cited

Copeland, Michael. “The Difference Between AI, Machine Learning, and Deep Learning?” The Official NVIDIA Blog, 6 Nov. 2019, blogs.nvidia.com/blog/2016/07/29/whats-difference-artificial-intelligence-machine-learning-deep-learning-ai/.

Anandkumar, Anima, et al. “The Potential Dangers of Artificial Intelligence for Radiology and Radiologists.” Google Scholar, 17 Apr. 2020, www.jacr.org/article/S1546-1440(20)30403-8/abstract.

Baidu. “These Five AI Developments Will Shape 2021 and Beyond.” MIT Technology Review, 24 Feb. 2021, www.technologyreview.com/2021/01/14/1016122/these-five-ai-developments-will-shape-2021-and-beyond/.

Chukwudozie, Onyeka S., et al. “Attenuated Subcomponent Vaccine Design Targeting the SARS-CoV-2 Nucleocapsid Phosphoprotein RNA Binding Domain: In Silico Analysis.” Journal of Immunology Research, Hindawi, 17 Sept. 2020, www.hindawi.com/journals/jir/2020/2837670/.

“Facebook Shut Robots down after They Developed Their Own Language. It’s More Common than You Think.” The Independent, Independent Digital News and Media, 2 Aug. 2017, www.independent.co.uk/voices/facebook-shuts-down-robots-ai-artificial-intelligence-develop-own-language-common-a7871341.html.

Hosny, Ahmed, et al. “Artificial Intelligence in Radiology.” Google Scholar, 17 May 2018, www.nature.com/articles/s41568-018-0016-5.

“How AI Could Our Secret Weapon in Combating Vaccine Hesitancy.” www.deepscribe.ai/resources/how-ai-could-our-secret-weapon-in-combatting-vaccine-hesitancy.

Lambert, Fred. “Driverless Tesla Gets Pulled over by Police on ‘Smart Summon’ – Staged or Not?” Electrek, 3 Oct. 2019, electrek.co/2019/10/03/tesla-driverless-pulled-over-police-on-smart-summon/.

Makridakis, Spyros. “The Forthcoming Artificial Intelligence (AI) Revolution: Its Impact on Society and Firms.” Google Scholar, June 2017, www.sciencedirect.com/science/article/abs/pii/S0016328717300046.

Mind Tools Content Team. “Dealing With Guilt: Gaining Positive Outcomes From Negative Emotions.” Stress Management From MindTools.com, www.mindtools.com/pages/article/dealing-with-guilt.htm#:~:text=You%20might%20feel%20guilty%20because,what%20you%20have%20done%20well.

Misselhorn, Catrin. “Artificial Morality. Concepts, Issues and Challenges.” Google Scholar, 15 Feb. 2018, link.springer.com/article/10.1007/s12115-018-0229-y.

Mosic, Ranko. “Tesla Full Self Driving Beta Rollout - Imitation Learning.” Medium, 28 Dec. 2020, ranko-mosic.medium.com/tesla-full-self-driving-beta-rollout-imitation-learning-8a3323d6c025.

Thomas, Mike. “6 Dangerous Risks of Artificial Intelligence.” Built In, builtin.com/artificial-intelligence/risks-of-artificial-intelligence.

Bossmann, Julia. “Top 9 Ethical Issues in Artificial Intelligence.” World Economic Forum, www.weforum.org/agenda/2016/10/top-10-ethical-issues-in-artificial-intelligence/.